Conversational AI datasets to power agent performance

By Aravind Ganapathiraju


When building conversational agents for the contact center, we often obsess over model performance: which framework performs best, how fast inference runs, or which fine-tuning approach yields better accuracy. But there’s a deeper layer that quietly determines whether results are meaningful at all—the quality of the evaluation data.

In other words, before comparing models or architectures, we need to ask: what exactly are we evaluating on? That’s where evaluating conversational AI datasets becomes critical.

In the AI community, this is a hot topic. Companies like Galileo have built leaderboards on open-source agentic datasets such as Tau-bench, xLAM, and BFCL that cover several industry verticals. Beyond agentic systems, similar leaderboards focused on model comparison, most notably Artificial Analysis, have also gained prominence in recent years. Dedicated AI evaluation platforms, such as Cekura, Maxim, and Galileo, provide continuous visibility into agent performance across various domains.

Despite all this progress, there’s one area that still lags behind: the evaluation data itself. A dataset can look structured, balanced, and even comprehensive, but still fail to capture the messy, emotional, and unpredictable reality of real contact center conversations. That gap can lead to overly optimistic conclusions and painful surprises in production.

Let’s see what makes a dataset realistic, why synthetic “perfect” data can be misleading, and how to build a simple rubric for evaluating conversational AI datasets in a repeatable, practical way.

The example dataset.

Consider this sample test scenario from Galileo’s agent evaluation leaderboard, used to evaluate an insurance agent’s performance:

“Hi there! I need to make some changes to our dance company’s insurance setup. We have a performance tour coming up in 3 weeks, and I just realized I need to update several policies before we leave on the 15th. I need to add my lead dancer, Sofia, as a beneficiary on my life insurance, increase coverage on our equipment policy from $50k to $75k, cancel our venue liability policy that expires tomorrow, and schedule a consultation about international coverage options. Oh, and can you check if there are any discounts for professional arts organizations? I’m also worried about a pending claim from last month’s injury—it’s claim #DNC-22587.”

At first glance, this looks like a strong example: detailed, domain-rich, and packed with realistic entities. It’s exactly the kind of input an agent might need to handle. Right? Not quite.

Why is this a problematic example?

This dataset snippet illustrates a common issue in AI evaluation: over-engineered data. The content looks realistic but fails the voice test, which asks whether it matches how real people actually speak when they call customer support. Let’s break it down:

- Overloaded request. It includes six separate actions in one utterance. Real customers rarely list all their needs upfront.
- Not voice-like. It reads like an email or web form, not a spoken call. No pauses, no turn-taking.
- Too polished. Perfect grammar, no filler words, typos, or ambiguity. Real calls are full of “um,” “uh,” and mid-sentence corrections.
- Single turn. The agent doesn’t respond or clarify, which eliminates a key part of how dialogue systems are evaluated.
- Persona mismatch. A “dance company” might be creative, but it’s atypical for a general insurance contact center dataset.
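Several of these problems can be caught mechanically before anyone reads a sample closely. The sketch below is a crude heuristic screen, not a validated metric. It assumes each sample is a list of `(speaker, text)` pairs, and the filler list and the 40-word threshold for a “long” opening request are illustrative assumptions that would need tuning against real call transcripts.

```python
import re

# Illustrative assumptions: tune both against real transcripts for your domain.
FILLERS = {"um", "uh", "er", "hmm"}
MAX_OPENING_WORDS = 40


def screen_sample(turns: list[tuple[str, str]]) -> list[str]:
    """Return heuristic red flags for a sample given as (speaker, text) pairs."""
    customer_turns = [text for speaker, text in turns if speaker == "customer"]
    agent_turns = [text for speaker, text in turns if speaker == "agent"]
    # Lowercased word tokens across all customer speech.
    words = re.findall(r"[a-z']+", " ".join(customer_turns).lower())
    opening = re.findall(r"[a-z']+", customer_turns[0].lower()) if customer_turns else []

    flags = []
    if not agent_turns:
        flags.append("single turn: no agent response")
    if not any(word in FILLERS for word in words):
        flags.append("too polished: no filler words")
    if len(opening) > MAX_OPENING_WORDS:
        flags.append("possibly overloaded: long opening request")
    return flags
```

A screen like this only triages; judgments such as persona fit and overall realism still need human or model-based review.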

A conversational AI system tested against such examples may appear to perform well, only to crumble in production when faced with real, human unpredictability. Human speech is noisy: people use fillers, hesitate, repeat themselves, and correct themselves mid-sentence. Speech-to-text transcription errors only add to the problem. The evaluation dataset should therefore make it possible to simulate these complexities realistically.
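When only clean text is available, one way to approximate this noise is to perturb utterances programmatically. The following is a minimal sketch: the filler list and the table of speech-to-text confusions are hypothetical toy values, not drawn from any real ASR system, and the function name `add_speech_noise` is made up for illustration. It inserts hesitations, repeats words, and swaps in misrecognitions, using a fixed random seed so each noisy variant is reproducible.

```python
import random

# Hypothetical toy values for illustration only; not taken from any ASR system.
FILLERS = ["um", "uh", "you know"]
ASR_CONFUSIONS = {"policy": "police", "claim": "clean", "seventy": "seventeen"}


def add_speech_noise(text: str, seed: int = 0, p_filler: float = 0.15,
                     p_repeat: float = 0.05, p_asr: float = 0.3) -> str:
    """Return a noisier, more speech-like variant of a clean utterance."""
    rng = random.Random(seed)  # seeded so the same input always gets the same noise
    out = []
    for word in text.split():
        if rng.random() < p_filler:
            out.append(rng.choice(FILLERS) + ",")  # hesitation before the word
        if rng.random() < p_repeat:
            out.append(word)  # stutter-style repetition
        core = word.rstrip(".,?!")
        suffix = word[len(core):]  # keep trailing punctuation intact
        if core.lower() in ASR_CONFUSIONS and rng.random() < p_asr:
            word = ASR_CONFUSIONS[core.lower()] + suffix  # simulated misrecognition
        out.append(word)
    return " ".join(out)
```

For example, `add_speech_noise("Cancel the venue policy.", p_filler=0, p_repeat=0, p_asr=1)` returns `"Cancel the venue police."`. Perturbation like this is a stopgap: it adds surface noise but not the turn structure of real calls, so it complements rather than replaces realistic multi-turn data.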

Rewriting for realism in a voice use case.

Now, let’s rewrite this example as a realistic multi-turn interaction:

Customer: Hi, uh, I’ve got a couple of things I need help with on my insurance. Is now a good time?

Agent: Of course, I can help. What’s the first change you’d like to make?

Customer: Sure. So, um, I need to add someone… my lead dancer, Sofia… as a beneficiary on my life insurance.

Agent: Got it, adding Sofia as a beneficiary. What’s next?

Customer: Right. Also, our equipment policy—I think it’s fifty thousand right now?—I’d like to bump that up to seventy-five.

Agent: Okay, increasing equipment coverage. Anything else?

Customer: Yeah, can you also cancel our venue liability policy? I think it expires tomorrow.

Agent: Cancel venue liability policy expiring tomorrow, understood.

Customer: Thanks. And, um, we’ve got a tour coming up overseas. I wanted to ask about international coverage—maybe set up a consultation?

Agent: Sure, I can arrange that.

Customer: Oh, and before I forget—are there any discounts for professional arts organizations?

Agent: I’ll check for you. Anything else?

Customer: Yeah, there’s also a pending injury claim—I think it’s DNC-22587. Could you check its status?

Now the conversation sounds human. It includes hesitations, clarifications, and incremental intent discovery. This single example now tests turn management, memory, context tracking, and intent sequencing, all key factors in evaluating how agents handle real calls.
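To make those capabilities measurable, each turn can carry the intents it introduces, and an agent’s run can then be checked against them. This is a minimal sketch under stated assumptions: the `Turn` structure, the intent labels, and the `handled` list (for instance, the actions an agent actually took, read from its tool calls) are all hypothetical and not part of any particular evaluation framework.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str  # "customer" or "agent"
    text: str
    # Hypothetical intent labels that this turn introduces.
    intents: list[str] = field(default_factory=list)


def expected_intents(turns: list[Turn]) -> list[str]:
    """Intents in the order the customer reveals them."""
    return [i for t in turns if t.speaker == "customer" for i in t.intents]


def intent_sequence_score(expected: list[str], handled: list[str]) -> tuple[float, bool]:
    """Return the share of expected intents handled and whether they were handled in order."""
    covered = [i for i in expected if i in handled]
    recall = len(covered) / len(expected) if expected else 1.0
    # Expected intents as the agent actually performed them, in its order.
    handled_expected = [i for i in handled if i in expected]
    return recall, handled_expected == covered


# The multi-turn rewrite above, reduced to shortened customer turns.
dialogue = [
    Turn("customer", "I need to add my lead dancer, Sofia, as a beneficiary.", ["add_beneficiary"]),
    Turn("customer", "Our equipment policy, I'd like to bump that up to seventy-five.", ["increase_coverage"]),
    Turn("customer", "Can you also cancel our venue liability policy?", ["cancel_policy"]),
    Turn("customer", "Maybe set up a consultation about international coverage?", ["schedule_consultation"]),
    Turn("customer", "Are there any discounts for professional arts organizations?", ["check_discounts"]),
    Turn("customer", "There's also a pending injury claim, DNC-22587.", ["claim_status"]),
]
```

If an agent handles every request except the discount check, `intent_sequence_score(expected_intents(dialogue), handled)` gives a recall of 5/6 while the order check still passes; swapping the first two actions keeps recall at 1.0 but fails the order check.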

Developing a rubric for dataset quality.

How do we formalize “good” conversational data? Below is a practical rubric you can use when designing or reviewing datasets for AI agent evaluation:

| Dimension | Description | Score |
|---|---|---|
| Realism | Does the scenario reflect actual customer interactions, not idealized scenarios? | 0-5 |
| Fit for voice use cases | Does the dataset allow for a spoken dialogue with pauses, fillers, interruptions and other speaking artifacts? | 0-5 |
| Human-centric realism | Does it allow for inclusion of emotional tone, ambiguity, or colloquial phrasing? | 0-5 |
| Multi-turn interaction quality | Does the dataset allow for conversation to unfold naturally across multiple turns with clarifications? | 0-5 |
| User persona realism | Does the simulated customer sound like a real person with context and motivation? | 0-5 |
| Diversity and coverage | Does the dataset span a range of personas, intents, and complexities? | 0-5 |

Each score helps identify weaknesses in the evaluation data. For instance, a dataset with high realism but low diversity might give a misleadingly good grade to agents that perform well for one demographic but fail for others. The overall dataset quality can be computed by aggregating the scores of individual datapoints (for example, by averaging them), so that it also ranges from 0 to 5, just like the individual dimensions in the rubric.
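A minimal sketch of that aggregation, assuming an unweighted mean (other choices, such as weighting dimensions or taking the minimum, are equally possible) and hypothetical dictionary keys for the six rubric dimensions. It also returns the mean per dimension, which makes the weakest dimension easy to spot.

```python
from statistics import mean

# Hypothetical keys for the six rubric dimensions.
DIMENSIONS = (
    "realism",
    "fit_for_voice",
    "human_centric_realism",
    "multi_turn_quality",
    "persona_realism",
    "diversity_coverage",
)


def dataset_quality(samples: list[dict[str, int]]) -> tuple[float, dict[str, float]]:
    """Aggregate per-datapoint rubric scores (each 0-5) into an overall 0-5 score.

    Uses an unweighted mean and also returns the mean for each dimension.
    """
    if not samples:
        raise ValueError("need at least one scored datapoint")
    for sample in samples:
        for dim in DIMENSIONS:
            if not 0 <= sample[dim] <= 5:
                raise ValueError(f"{dim} score {sample[dim]} is outside 0-5")
    per_dimension = {dim: mean(s[dim] for s in samples) for dim in DIMENSIONS}
    overall = mean(per_dimension.values())
    return overall, per_dimension


# Two illustrative datapoints that are realistic but not diverse.
scores = [
    {**dict.fromkeys(DIMENSIONS, 4), "diversity_coverage": 1},
    {**dict.fromkeys(DIMENSIONS, 3), "diversity_coverage": 2},
]
overall, per_dim = dataset_quality(scores)
weakest = min(per_dim, key=per_dim.get)  # "diversity_coverage"
```

Because every dimension is averaged over the same datapoints, the overall mean stays within 0 to 5, and the per-dimension view points straight at the diversity gap described above.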

Applying the rubric to the original example.

Evaluating the original dance company insurance snippet:

| Dimension | Score | Rationale |
|---|---|---|
| Realism | 2 | Overloaded and unnatural request flow. |
| Fit for voice use cases | 1 | Written like text, not spoken dialogue. |
| Human-centric realism | 1 | Too polished. No filler words or imperfections. |
| Multi-turn interaction quality | 1 | Single turn; no agent response. |
| User persona realism | 2 | Unique, but atypical. |
| Diversity and coverage | 2 | Limited variation if the dataset repeats this pattern. |
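If the six dimension scores are combined with an unweighted mean (one reasonable aggregation; the rubric itself does not mandate a specific one), the original snippet’s overall score works out as follows:

```latex
\bar{s} = \frac{1}{6}\sum_{k=1}^{6} s_k = \frac{2 + 1 + 1 + 1 + 2 + 2}{6} = \frac{9}{6} = 1.5
```

That is 1.5 out of a possible 5, well below the midpoint of the scale.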

Prompt for applying the rubric.

Here’s a reusable prompt that helps automate the scoring of dataset samples.

Prompt:

You are evaluating the quality of a dataset used to test conversational agents in the insurance domain. For the given sample:

- Score it on the following dimensions (0–5): Realism, fit for voice use cases, human-centric realism, multi-turn interaction quality, and persona realism. Fit for voice means that the scenario should be in a form that can be enacted as a human conversation.
- If applicable, assess dataset-level diversity and coverage.
- Provide a short justification for each score.
- Suggest concrete improvements for realism and diversity.
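To run a prompt like this over many samples, it helps to request machine-readable output and reject malformed replies. The sketch below assumes an added instruction asking for a JSON reply (this format is not part of the prompt above), uses hypothetical dimension keys, and leaves out the model call itself because that depends on the provider. Diversity and coverage is omitted because the prompt treats it as a dataset-level judgment.

```python
import json

# Hypothetical keys for the five per-sample dimensions.
DIMENSIONS = ["realism", "fit_for_voice", "human_centric_realism",
              "multi_turn_quality", "persona_realism"]

# Condensed version of the prompt, plus an assumed JSON output instruction.
RUBRIC_PROMPT = (
    "You are evaluating the quality of a dataset used to test conversational agents "
    "in the insurance domain. Score the sample below from 0 to 5 on: "
    + ", ".join(DIMENSIONS)
    + ". Reply with JSON of the form "
    '{"scores": {<dimension>: <int>}, "justifications": {<dimension>: <str>}, '
    '"improvements": [<str>]}.\n\nSample:\n'
)


def build_prompt(sample: str) -> str:
    """Append one dataset sample to the rubric prompt."""
    return RUBRIC_PROMPT + sample


def parse_scores(reply: str) -> dict[str, int]:
    """Validate a model reply and return its scores; raise ValueError if malformed."""
    data = json.loads(reply)  # json.JSONDecodeError is a subclass of ValueError
    scores = data.get("scores") if isinstance(data, dict) else None
    if not isinstance(scores, dict):
        raise ValueError("reply has no 'scores' object")
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for {missing}")
    for d in DIMENSIONS:
        value = scores[d]
        if type(value) is not int or not 0 <= value <= 5:
            raise ValueError(f"{d} must be an integer in 0-5, got {value!r}")
    return {d: scores[d] for d in DIMENSIONS}
```

Rejected replies can be retried or routed to a human reviewer, so a single malformed response does not silently skew the dataset-level score.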

Why dataset evaluation quality matters.

For technology leaders, evaluating conversational AI datasets isn’t just a technical step; it’s a strategic reality check on the product. Poorly designed evaluation data creates a dangerous illusion of readiness. A model that scores 90% on synthetic datasets might still fail when faced with the unstructured, emotional nature of live interactions.

In contrast, robust and realistic datasets act like a wind tunnel for AI systems. They reveal weaknesses early, reduce false positives, and build trust in performance metrics. They shift the focus from model precision to customer reliability, which is exactly what matters in production environments.

How Talkdesk approaches dataset quality.

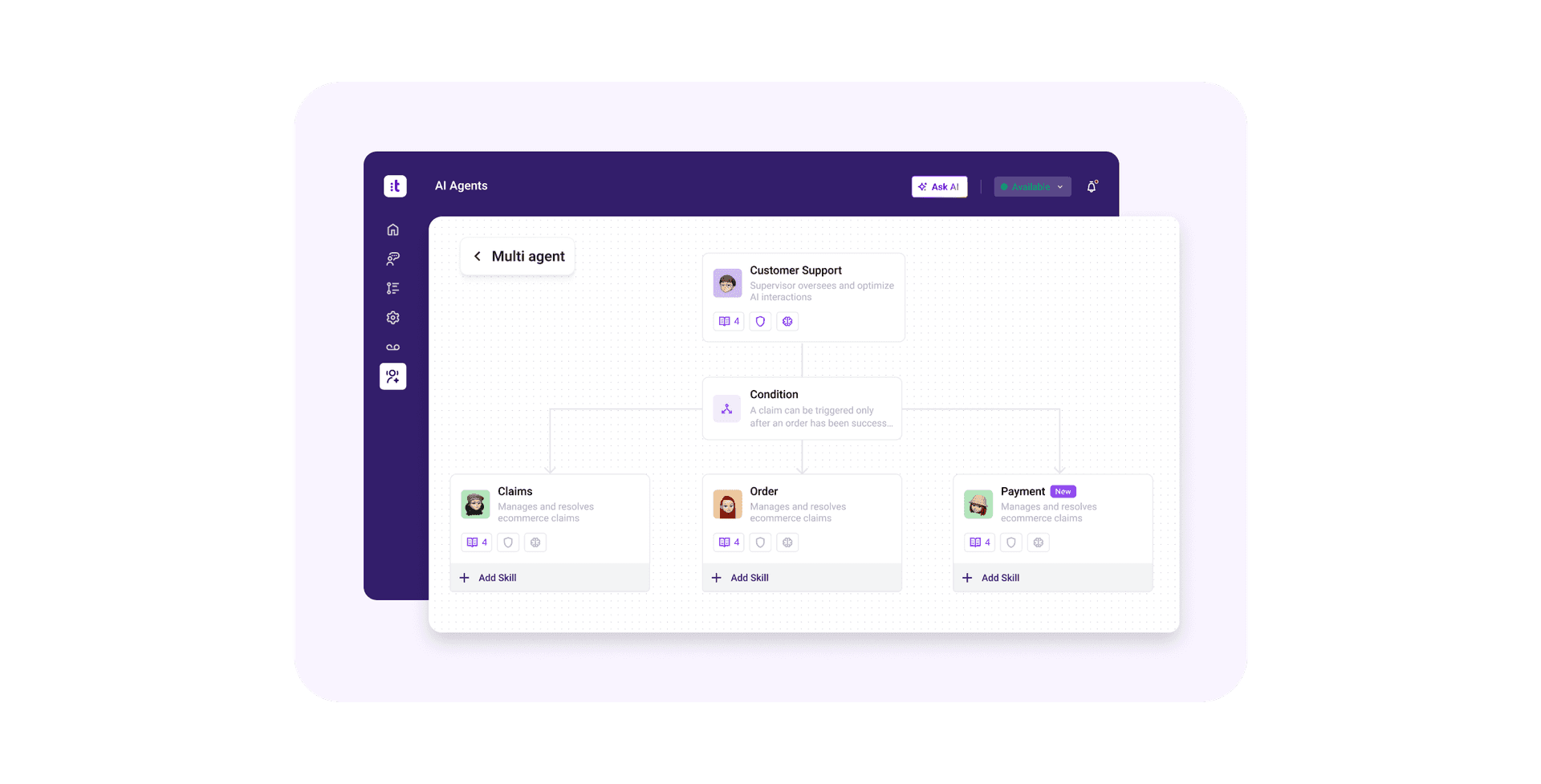

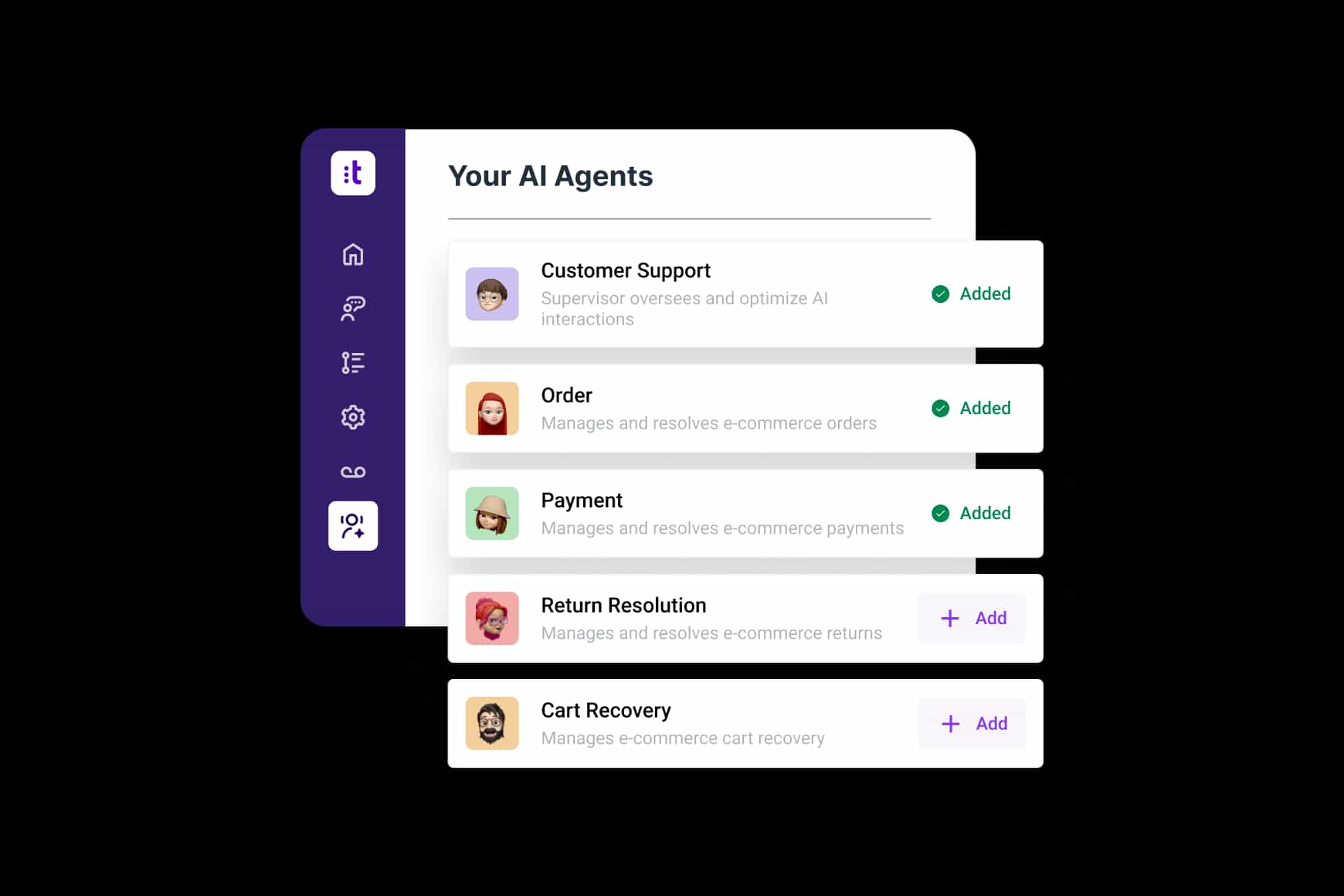

At Talkdesk, we take this principle to heart. We’ve built large-scale, realistic datasets designed explicitly for chat and voice AI agents, covering a wide range of industries. This gives us confidence that the Talkdesk AI Agent platform delivers accurate, reliable performance in the environments that matter most: real contact centers, with real customers.

This foundation allows our partners and clients to move beyond “lab accuracy” and into production confidence, the ultimate test of any AI system.

Final takeaway.

In the era of applied AI, data realism is a true differentiator. Models may evolve weekly, but high-fidelity, human-like datasets remain the foundation of durable performance.

Developing datasets to evaluate AI systems is not an afterthought but the gateway to trustworthy AI. Grounding these datasets in real human behavior ensures that agents don’t just sound intelligent; they act intelligently when it matters most. That’s how we move from impressive demos to dependable deployments and from good AI to memorable customer experiences.
