Building a secure AI agent: Combining LLM guardrails with traditional data protection

By Yunjing Ma

As enterprises rapidly adopt LLM-powered AI agents, they’re also facing new security and data protection challenges. These new AI technologies bring unprecedented intelligence and efficiency, but also vulnerabilities, particularly in enterprise environments, where a single breach can quickly erode trust.

The limits of LLM guardrails.

When people talk about AI agent security, the first layer that comes to mind is often LLM-level guardrails. These include content and ethics guardrails, data and privacy guardrails, operational guardrails, safety guardrails, and more. They help govern what inputs are accepted, what outputs are generated, and how the LLM behaves.

However, it’s important to recognize the predictive nature of LLMs. Even with the most advanced prompt engineering and model tuning, LLMs can’t guarantee 100% accuracy or determinism. There will always be edge cases where the model’s output might include unintended data or interpretations.

Think of it this way: even the most cautious driver can end up in an accident if the brakes aren’t fully reliable. Similarly, if your AI agent relies solely on LLM-level safeguards, there’s still a residual risk of data exposure or policy violations.

Why traditional data protection still matters.

For enterprise customers, a single security incident, such as exposing a customer’s personal data or leaking internal knowledge, can severely damage reputation and customer confidence. Once trust is broken, it’s difficult to rebuild. Just as few people would trust a car model again after a brake failure, customers won’t continue using an AI agent that mishandles sensitive data.

So, are we out of options? Absolutely not.

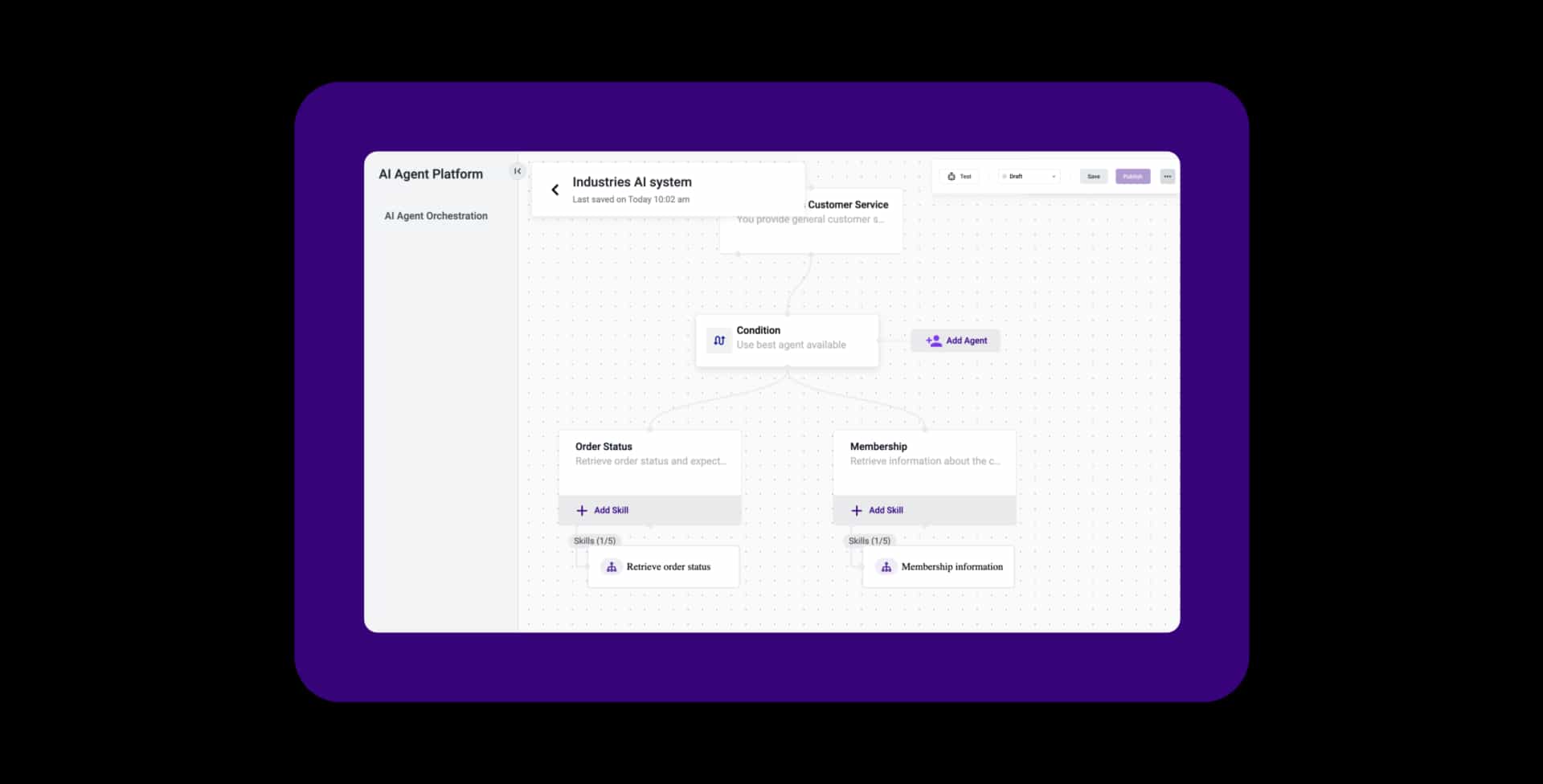

An AI agent is still fundamentally a cloud-based software system, powered by LLMs, but operating within a controlled infrastructure. This means we can (and must) layer traditional, proven data protection mechanisms on top of AI-specific guardrails.

A layered security approach: Talkdesk AI Agent example.

At Talkdesk, our AI Agent security framework combines LLM guardrails with traditional data protection controls to provide a multi-layered defense:

LLM guardrails:

- Ethical and content filters prevent unsafe, biased, or policy-violating outputs.
- Privacy filters detect and redact sensitive data, such as PII or confidential information.
- Customized constraints limit the scope of automated actions according to business rules.
- Safety guardrails define safe boundaries to prevent harmful or unverified actions.
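To make the "customized constraints" guardrail more concrete, here is a minimal sketch of a check that runs on every action the LLM proposes before it is executed. The action names, the refund limit, and the `ProposedAction` type are all hypothetical and chosen only for illustration; they are not Talkdesk's actual rules or API.

```python
from dataclasses import dataclass

# Hypothetical business rules (illustrative only): which actions the agent may
# run automatically, and the largest refund it may issue without a human.
ALLOWED_ACTIONS = {"lookup_order", "issue_refund"}
MAX_AUTO_REFUND = 50.00


@dataclass
class ProposedAction:
    """An action the LLM has asked the agent runtime to perform."""
    name: str
    params: dict


def check_action(action: ProposedAction) -> tuple[bool, str]:
    """Return (allowed, reason) for an action proposed by the LLM."""
    # Rule 1: anything not explicitly allowlisted is rejected.
    if action.name not in ALLOWED_ACTIONS:
        return False, f"action '{action.name}' is not on the allowlist"

    # Rule 2: refunds must be positive numbers within the automatic limit.
    if action.name == "issue_refund":
        amount = action.params.get("amount")
        is_number = isinstance(amount, (int, float)) and not isinstance(amount, bool)
        if not is_number or amount <= 0:
            return False, "refund amount must be a positive number"
        if amount > MAX_AUTO_REFUND:
            return False, "refund exceeds the automatic limit; escalate to a human"

    return True, "ok"
```

Because the check is ordinary deterministic code rather than a prompt instruction, it behaves the same way regardless of how the model was prompted or what it generated.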

Traditional data protection mechanisms:

- Access control and role-based permissions. Different roles (e.g., internal AI agents vs. customer-facing agents) have strictly defined privileges.
- Data sensitivity and segregation. Each dataset is classified by sensitivity level and strictly confined to its designated scope, preventing cross-boundary access.
- Multi-level isolation. Data and processes are isolated at multiple levels, such as tenant, user, and session.
- Encryption. Protects data at rest and in transit using strong cryptographic standards.
- Data masking and tokenization. Replaces sensitive data (like PII) with masked or tokenized values for safe use in model contexts.
- Network isolation and API gateways. Separates internal communications from external user interactions.
- Schema and data type enforcement. Ensures encrypted or sensitive data can only be handled in expected formats, preventing accidental exposure.
- Data audit and monitoring. Tracks access and usage for compliance and anomaly detection.
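As an illustration of how the first three controls in this list can work together, the following sketch places a data access function between the agent and the data store. It assumes a simple three-level sensitivity scale and two roles; the role names, the `Record` and `Caller` types, and the `read_record` function are hypothetical, not part of any Talkdesk interface.

```python
from dataclasses import dataclass
from enum import IntEnum


class Sensitivity(IntEnum):
    """Assumed classification scale; higher means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


# Hypothetical mapping from role to the highest sensitivity it may read.
ROLE_CLEARANCE = {
    "customer_facing_agent": Sensitivity.PUBLIC,
    "internal_agent": Sensitivity.INTERNAL,
}


@dataclass(frozen=True)
class Record:
    tenant_id: str
    sensitivity: Sensitivity
    body: str


@dataclass(frozen=True)
class Caller:
    role: str
    tenant_id: str


class AccessDenied(Exception):
    """Raised when a read violates isolation or clearance rules."""


def read_record(caller: Caller, record: Record) -> str:
    """Return the record body only if tenant and clearance checks pass."""
    # Tenant isolation: a caller never reads another tenant's data.
    if caller.tenant_id != record.tenant_id:
        raise AccessDenied("cross-tenant access is not permitted")

    # Role-based access: unknown roles get no clearance at all.
    clearance = ROLE_CLEARANCE.get(caller.role)
    if clearance is None or record.sensitivity > clearance:
        raise AccessDenied("role is not cleared for this sensitivity level")

    return record.body
```

In this sketch the checks depend only on the caller's identity and the record's classification, so a request assembled from model output is subject to the same rules as any other request.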

This layered approach means that even if an LLM-generated output or action attempts to access or expose sensitive data, infrastructure-level controls will still block or sanitize the operation.
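The "sanitize" path can be pictured with a small tokenization sketch. It assumes email addresses are the only sensitive values and uses an in-memory `TokenVault` class, which is a hypothetical stand-in for a real tokenization service; a production system would cover more data types and store the mapping securely.

```python
import re

# Simplified email pattern, used for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class TokenVault:
    """In-memory stand-in for a tokenization service (hypothetical)."""

    def __init__(self) -> None:
        self._by_value: dict[str, str] = {}
        self._by_token: dict[str, str] = {}

    def tokenize(self, text: str) -> str:
        """Replace each email address with a stable placeholder token."""
        def _swap(match: re.Match) -> str:
            value = match.group(0)
            token = self._by_value.get(value)
            if token is None:
                token = f"<EMAIL_{len(self._by_value) + 1}>"
                self._by_value[value] = token
                self._by_token[token] = value
            return token

        return EMAIL_RE.sub(_swap, text)

    def detokenize(self, text: str, authorized: bool) -> str:
        """Restore original values, but only for authorized callers."""
        if not authorized:
            return text
        for token, value in self._by_token.items():
            text = text.replace(token, value)
        return text


vault = TokenVault()
original = "Contact ana@example.com or bob@example.org, then ana@example.com again"
safe_for_model = vault.tokenize(original)
# safe_for_model == "Contact <EMAIL_1> or <EMAIL_2>, then <EMAIL_1> again"
```

Running `tokenize` on text before it enters the model context, and again on model output before it leaves the system, means a raw address that slips past the LLM guardrails is still replaced by a token at the infrastructure layer.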

Building trust through defense-in-depth.

By combining AI guardrails (focused on model behavior) with cloud-native data protection mechanisms (focused on system and data integrity), Talkdesk ensures that our AI agents meet the highest enterprise-grade security standards.

LLM-based AI may be new, but security fundamentals remain timeless. With layered defenses, Talkdesk is committed to delivering the most secure and reliable AI agent platform for modern enterprises.